Machine learning project

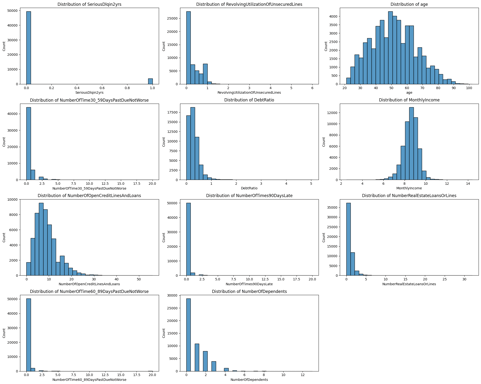

I started by analyzing the distribution of the data and noticed that some categories were significantly underrepresented. To correct this imbalance, I used an oversampling technique so that these categories would be better represented in the dataset. I ruled out a linear classifier for this Kaggle project because the data showed complex, non-linear interactions between variables that a linear model could not adequately capture.
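To illustrate the oversampling step, here is a minimal sketch of random oversampling with NumPy. The text above does not say which oversampling technique was used, so this is one common variant rather than the project's actual method. The function name `random_oversample` and the toy data are assumptions made for the example.

```python
import numpy as np

def random_oversample(X, y, seed=0):
    """Resample minority-class rows (with replacement) until every class
    has as many rows as the largest class. Hypothetical helper."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    keep = []
    for cls, count in zip(classes, counts):
        idx = np.flatnonzero(y == cls)
        # Draw the missing rows at random from the existing rows of this class.
        extra = rng.choice(idx, size=target - count, replace=True)
        keep.append(np.concatenate([idx, extra]))
    keep = np.concatenate(keep)
    rng.shuffle(keep)
    return X[keep], y[keep]

# Synthetic, imbalanced toy data: 90 rows of class 0, 10 rows of class 1.
X = np.arange(200, dtype=float).reshape(100, 2)
y = np.array([0] * 90 + [1] * 10)

X_bal, y_bal = random_oversample(X, y)
print(np.bincount(y_bal))  # class counts after oversampling
```

Oversampling is normally applied to the training split only, so that duplicated rows do not leak into the data used for evaluation.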

Discovering the dataframe

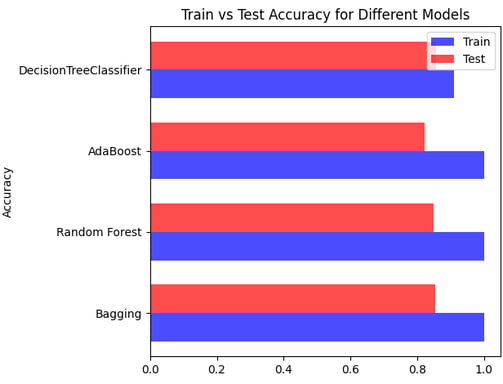

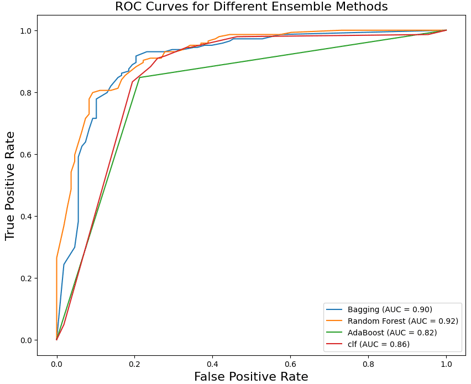

I used four tree-based models: Bagging, Random Tree, AdaBoost, and a “classic” decision tree. Each model performed well, but I observed slight differences between the training and test results. Though small, these differences suggested varying degrees of overfitting or underfitting depending on the model.
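The train/test comparison described above could be sketched with scikit-learn as follows. This is an illustration under assumptions, not the project's code: the data is synthetic (`make_classification`), the hyperparameters are library defaults, and "Random Tree" is assumed here to correspond to `RandomForestClassifier`.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (AdaBoostClassifier, BaggingClassifier,
                              RandomForestClassifier)
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the competition data (assumption).
X, y = make_classification(n_samples=600, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

models = {
    "Decision tree": DecisionTreeClassifier(random_state=0),
    "Bagging": BaggingClassifier(random_state=0),
    "Random forest": RandomForestClassifier(random_state=0),  # assumed meaning of "Random Tree"
    "AdaBoost": AdaBoostClassifier(random_state=0),
}

gaps = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    train_acc = model.score(X_train, y_train)
    test_acc = model.score(X_test, y_test)
    # A large positive gap (train much better than test) hints at overfitting.
    gaps[name] = train_acc - test_acc
    print(f"{name:14s} train={train_acc:.3f} test={test_acc:.3f} gap={gaps[name]:+.3f}")
```

A training score well above the test score points toward overfitting, while low scores on both splits point toward underfitting.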

Decision trees

Because the four tree models (Bagging, Random Tree, AdaBoost, and classic decision tree) showed discrepancies between training and test results, I used ROC (Receiver Operating Characteristic) curves to refine my choice of model. By plotting the true positive rate against the false positive rate at different classification thresholds, the ROC curves allowed me to evaluate each model's performance more precisely.
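As a sketch of how such a ROC comparison might be computed, the example below uses scikit-learn's `roc_curve` and `roc_auc_score` on held-out data. The dataset is synthetic, and only two models with default settings are shown, so the numbers say nothing about the project's real results.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.metrics import roc_auc_score, roc_curve
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the competition data (assumption).
X, y = make_classification(n_samples=600, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

models = {
    "Random forest": RandomForestClassifier(random_state=0),
    "AdaBoost": AdaBoostClassifier(random_state=0),
}

curves, aucs = {}, {}
for name, model in models.items():
    model.fit(X_train, y_train)
    # Score for the positive class; ROC needs scores, not hard labels.
    scores = model.predict_proba(X_test)[:, 1]
    fpr, tpr, thresholds = roc_curve(y_test, scores)
    curves[name] = (fpr, tpr)
    aucs[name] = roc_auc_score(y_test, scores)
    print(f"{name:14s} AUC={aucs[name]:.3f}")
```

Each `(fpr, tpr)` pair can be passed to a plotting library to draw the curve. The area under it (AUC) summarizes the curve in a single number, where 0.5 corresponds to random guessing and 1.0 to perfect ranking.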

Study of the results

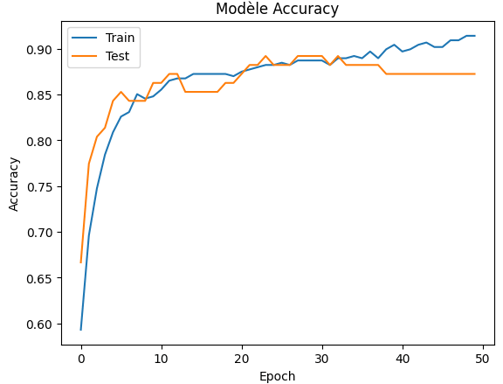

With some time left in the competition, I built a three-layer neural network with TensorFlow Keras. The first layer, with 32 neurons and a 'relu' activation function, captures non-linear relationships between variables. The second, smaller layer, with 8 neurons, continues this processing by reducing the dimensionality while retaining the important features. The third layer, with a single neuron and a 'sigmoid' activation function, produces the final output, classifying the data into two categories. The network was trained for binary classification using the binary crossentropy loss function, with accuracy as the key metric.
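The architecture described above could look roughly like this in Keras. The layer sizes, the 'relu' and 'sigmoid' activations on the first and last layers, the loss, and the metric come from the description. The number of input features, the activation of the second layer, the `adam` optimizer, and the toy training data are assumptions made so the sketch runs.

```python
import numpy as np
from tensorflow import keras

n_features = 10  # hypothetical input width; the real dataset's width is not stated

model = keras.Sequential([
    keras.Input(shape=(n_features,)),
    keras.layers.Dense(32, activation="relu"),    # captures non-linear relationships
    keras.layers.Dense(8, activation="relu"),     # smaller layer; activation is an assumption
    keras.layers.Dense(1, activation="sigmoid"),  # single output in [0, 1]
])
model.compile(optimizer="adam",                   # optimizer is an assumption
              loss="binary_crossentropy",
              metrics=["accuracy"])

# Tiny synthetic dataset, only to show the training and prediction calls.
rng = np.random.default_rng(0)
X = rng.normal(size=(64, n_features)).astype("float32")
y = (X[:, 0] + X[:, 1] > 0).astype("float32")

model.fit(X, y, epochs=2, batch_size=16, verbose=0)
probs = model.predict(X[:8], verbose=0)
labels = (probs >= 0.5).astype(int)  # threshold the sigmoid output into two classes
```

The 0.5 threshold is the usual default for turning the sigmoid output into a class label; it can be moved if false positives and false negatives carry different costs.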

Neural network

Conclusion

This Kaggle project was a success, earning me 3rd place in the competition. It is a testament to the effectiveness of my analytical approach and my ability to apply advanced machine learning techniques. For those interested in a more detailed understanding of my methods and analyses, my full report is available below, providing an in-depth look at my work and the strategies that led to this success.